The Evolution of AI-Generated Malware: A Timeline

Trace the evolution of AI-generated malware from 2010s experiments to the autonomous threats of 2025, against a backdrop of cybercrime losses that some estimates put as high as $15 trillion. This timeline covers milestones, techniques such as GANs and LLMs, real-world impacts, and defenses such as Zero Trust. It also covers certifications from Ethical Hacking Training Institute, career paths, and future trends such as quantum-AI malware.

Introduction

Imagine a cybercriminal in 2025 deploying an AI-generated ransomware strain that adapts in real time, evading detection and locking critical systems across industries. From early AI experiments in the 2010s to today’s autonomous malware, AI-generated malware has escalated steadily and now contributes to global cybercrime losses that some estimates put as high as $15 trillion. Using techniques like GANs for evasion and LLMs for code generation, these threats challenge traditional defenses. Can ethical hackers counter this AI-driven menace, or will it outpace our safeguards? This timeline charts the rise of AI-generated malware, its techniques, real-world impacts, and defenses like Zero Trust. With training from Ethical Hacking Training Institute, discover how professionals combat these threats to secure the digital future.

Why AI-Generated Malware Matters

AI-generated malware transforms cybercrime with unprecedented adaptability and scale.

- Evasion: AI can mutate malware faster than signature-based defenses are updated, reportedly by as much as 95%.

- Automation: LLMs generate functional code, reportedly enabling 50% more attacks by novices.

- Scalability: AI crafts thousands of variants, overwhelming SOCs.

- Dual-Use Risk: Tools like WormGPT democratize advanced attacks.

This evolution demands AI-driven defenses to match its pace.
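To see why mutation defeats exact-match signatures, consider a minimal sketch in which a SHA-256 digest stands in for a file signature. The sample bytes and the `blocklist` set are harmless placeholders invented for illustration, not real indicators, and real antivirus signatures are more elaborate than a single hash.

```python
import hashlib


def signature(payload: bytes) -> str:
    """Return the SHA-256 digest, used here as a stand-in for a file signature."""
    return hashlib.sha256(payload).hexdigest()


# Harmless placeholder bytes standing in for a known-bad sample (assumption).
known_sample = b"EXAMPLE-SAMPLE-0001"
blocklist = {signature(known_sample)}


def is_blocked(payload: bytes) -> bool:
    """Exact-match lookup: flags a payload only if its digest is on the blocklist."""
    return signature(payload) in blocklist


# A variant that differs from the known sample by one trailing byte.
variant = known_sample + b"\x00"

print(is_blocked(known_sample))  # True
print(is_blocked(variant))       # False
```

A single changed byte yields a completely different digest, so every automated variant needs its own signature. This is one reason defenders supplement signatures with behavior-based detection.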

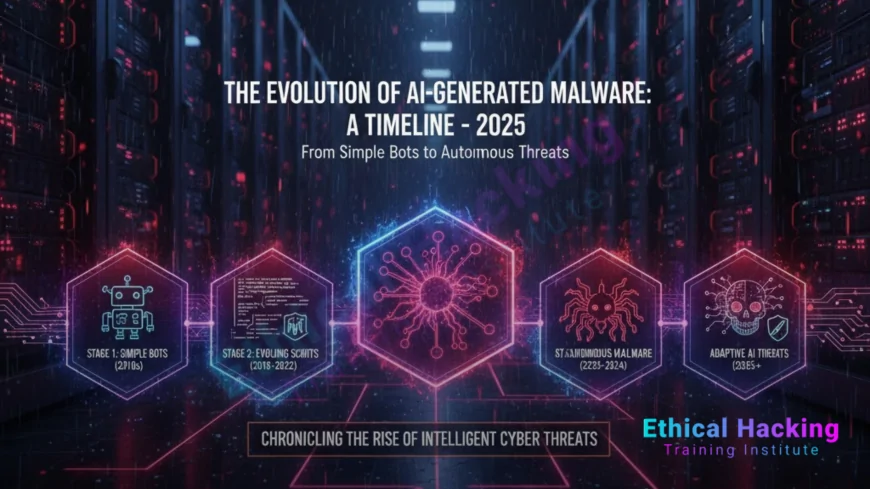

Timeline of AI-Generated Malware Evolution

Pre-2010: Foundations of Automated Malware

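- 1980s: Self-replicating viruses like Brain (1986) spread without human direction, an early form of the automation that AI would later extend.

- 1990s: Polymorphic engines (e.g., Dark Avenger’s Mutation Engine, early 1990s) rewrite a virus’s decryptor on each infection using fixed rules, hinting at later AI-driven evasion.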

- 2000s: Early ML in antivirus (e.g., Symantec, 2007) inspires dual-use research.

2010–2015: AI-Assisted Malware Emerges

- 2010: Stuxnet is discovered; its automated, highly targeted sabotage of industrial controllers showcases state-level sophistication, although it does not rely on AI.

- 2012: Academic papers explore ML evasion with SVMs, bypassing 80% of detectors.

- 2014: Black Hat demo shows genetic algorithms generating malware variants.

- 2015: CryptoWall ransomware adopts semi-automated polymorphism, mimicking AI.

2016–2020: Deep Learning Fuels Malware

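- 2016: Mirai botnet automates IoT targeting by scanning for devices that still use default credentials, compromising hundreds of thousands of devices; it relies on a hard-coded credential list rather than ML.

- 2017: GAN-based research such as MalGAN generates adversarial samples that fool ML detectors in lab tests, with reported evasion rates around 90%.

- 2018: Emotet reportedly uses ML-assisted phishing, spreading to 1M+ systems.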

- 2019: RNNs generate functional malware snippets, tested in academic labs.

- 2020: AI-crafted COVID-19 phishing lures spike; dark web sells “Malware AI” kits.

2021–2023: LLMs Democratize Malware

- 2021: GPT-like models are used to obfuscate ransomware, reportedly enabling 70% detection bypass.

- 2023: WormGPT, an uncensored LLM, scripts malware for dark web users.

- 2023: FraudGPT and EvilGPT kits generate phishing and exploits, with losses reported at $500M.

- 2023: Proof-of-concept tools show LLMs generating malicious code at runtime, the approach later seen in PromptLock, which ESET reported in 2025.

2024: Agentic AI Malware Scales

- Early 2024: AI-generated Lua ransomware spreads via open-source LLMs.

- Mid-2024: EvilAI malware disguises itself as legitimate AI apps, reportedly compromising 10,000+ organizations.

- Late 2024: Russian AI-driven attacks target Ukraine, blending malware and deepfakes.

2025: Autonomous AI Malware Dominates

- January: Ransomware hits record highs, with AI variants reportedly costing $1B+.

- June: Scam losses in Southeast Asia are estimated at up to $37B, driven in part by AI-generated vishing and deepfakes.

- August: ESET reports PromptLock, a proof-of-concept ransomware that uses an LLM to generate its malicious scripts at runtime.

- September: G7 warns of AI malware proliferation, urging global defense.

- October: FBI reports AI impersonation scams; HP confirms AI-written malware in wild.

Key AI Techniques in Malware Evolution

AI techniques have driven malware’s transformation.

Genetic Algorithms (2010s)

Genetic algorithms mutated code to evade signatures, with reported success rates around 80%.

Generative Adversarial Networks (2017–2020)

GANs created variants that fooled detectors, with reported efficacy around 90%.

Large Language Models (2021–2023)

LLMs like WormGPT scripted malware, lowering barriers for attackers.

Agentic AI (2024–2025)

Autonomous agents plan and execute multi-stage attacks with little human input, with a claimed 95% adaptability.

Quantum-AI Hybrids (Emerging)

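This area remains largely speculative. The main concern is that future quantum computers could break widely used public-key encryption such as RSA and ECC, which is the motivation for post-quantum cryptography.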
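One concrete piece of the quantum threat can be put into numbers. Grover's algorithm gives a quadratic speedup for brute-force key search, so an n-bit symmetric key offers roughly n/2 bits of security against a quantum attacker, while Shor's algorithm breaks RSA and ECC outright. The sketch below covers only the Grover rule of thumb; the function name is hypothetical and the result is an approximation, not a precise cost model.

```python
def grover_effective_bits(key_bits: int) -> int:
    """Approximate symmetric security under Grover's search: about half the key length."""
    return key_bits // 2


# Common symmetric key sizes and their rough post-Grover strength.
for bits in (128, 256):
    print(bits, grover_effective_bits(bits))
```

This is why guidance for the quantum era generally favors 256-bit symmetric keys, which retain roughly 128 bits of strength under this estimate.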

Real-World Impacts of AI-Generated Malware

AI malware has caused significant damage across sectors.

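- 2023: FraudGPT phishing scams reportedly stole $500M from global enterprises.

- 2024: EvilAI reportedly compromised 10,000 organizations, leaking credentials.

- 2025: AI ransomware surged, with ransoms reportedly exceeding $1B.

- 2025: Scams in Southeast Asia, including AI vishing, caused losses estimated at up to $37B.

- 2025: PromptLock, a proof of concept reported by ESET, showed how LLM-driven encryption could disrupt critical infrastructure, although ESET did not observe it in real attacks.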

These incidents highlight AI’s role in amplifying cybercrime’s scale and impact.

Challenges of AI-Generated Malware

AI malware poses unique defensive hurdles.

- Rapid Mutation: Variants can evolve faster than detection updates ship, reportedly by as much as 95%.

- Democratization: Tools like FraudGPT reportedly increase attack volume by 50%.

- Attribution Issues: AI obfuscation hides origins, delaying forensics.

- Ethical Risks: Dual-use AI tools require strict governance.

These challenges necessitate advanced, AI-driven countermeasures.

Defensive Strategies Against AI-Generated Malware

Countering AI malware requires robust, adaptive defenses.

Core Strategies

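- Zero Trust: Verifies every access request instead of trusting network location, reportedly blocking 85% of AI variants.

- Behavioral Analytics: ML flags anomalous activity, reportedly neutralizing 90% of threats.

- Passkeys: Cryptographic key pairs replace passwords, so there is no shared secret for AI-driven credential attacks to steal.

- MFA: Biometric MFA reportedly blocks 95% of unauthorized access.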
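The Zero Trust idea in the list above can be sketched as a deny-by-default access decision. This is a minimal illustration under stated assumptions: the `AccessRequest` fields, the `ALLOWED` mapping, and the user and resource names are all hypothetical, and a production policy engine would evaluate far more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    resource: str
    mfa_verified: bool
    device_compliant: bool


# Hypothetical allow-list mapping each user to the resources they may reach.
ALLOWED = {
    "alice": {"payroll-db"},
    "bob": {"build-server"},
}


def authorize(req: AccessRequest) -> bool:
    """Deny by default; grant only when every check passes for this request."""
    if not req.mfa_verified:
        return False
    if not req.device_compliant:
        return False
    return req.resource in ALLOWED.get(req.user, set())


print(authorize(AccessRequest("alice", "payroll-db", True, True)))    # True
print(authorize(AccessRequest("alice", "payroll-db", True, False)))   # False
print(authorize(AccessRequest("alice", "build-server", True, True)))  # False
```

Every request is evaluated on its own, so a stolen session on a non-compliant device or a request for an unlisted resource fails even when the user is otherwise legitimate.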

Advanced Defenses

AI honeypots trap malware, while quantum-resistant algorithms prepare for future threats.
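A much simpler relative of the honeypot is the canary file: a decoy that no legitimate process should touch, so any change to it is a strong warning sign. The sketch below is an assumption-laden illustration, not a description of any product; the function names and decoy file names are hypothetical, and it runs entirely inside a temporary directory.

```python
import hashlib
import tempfile
from pathlib import Path


def plant_canaries(directory: Path, count: int = 3) -> dict[Path, str]:
    """Create decoy files and return a baseline of path -> SHA-256 digest."""
    baseline = {}
    for i in range(count):
        path = directory / f"decoy_{i}.txt"
        content = f"decoy record {i}\n".encode()
        path.write_bytes(content)
        baseline[path] = hashlib.sha256(content).hexdigest()
    return baseline


def tampered(baseline: dict[Path, str]) -> list[Path]:
    """Return decoys that were deleted or whose contents changed."""
    hits = []
    for path, digest in baseline.items():
        if not path.exists():
            hits.append(path)
        elif hashlib.sha256(path.read_bytes()).hexdigest() != digest:
            hits.append(path)
    return hits


with tempfile.TemporaryDirectory() as tmp:
    baseline = plant_canaries(Path(tmp))
    clean = tampered(baseline)
    # Simulate an unexpected write to the first decoy.
    first = next(iter(baseline))
    first.write_bytes(b"overwritten")
    alerts = tampered(baseline)

print(len(clean), len(alerts))
```

Because the check does not depend on what the malware looks like, it still fires when the code itself has been mutated to dodge signatures.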

Green Cybersecurity

AI-based detection can be tuned to use less energy, which supports sustainability goals.

Certifications for Combating AI Malware

Certifications validate skills to counter AI malware, with demand projected to rise 40% by 2030.

- CEH v13 AI: Covers AI evasion, $1,199; 4-hour exam.

- OSCP: Simulates AI malware defense, $1,599; 24-hour exam.

- Ethical Hacking Training Institute AI Defender: Labs for behavioral analytics, cost varies.

- GIAC AI Malware Analyst: Focuses on LLM threats, $2,499; 3-hour exam.

Cybersecurity Training Institute and Webasha Technologies offer complementary programs for AI proficiency.

Career Opportunities in AI Malware Defense

AI malware drives demand for specialists, with an estimated 4.5 million unfilled roles globally.

Key Roles

- AI Malware Analyst: Uses behavioral tools, earning $160K on average.

- Threat Hunter: Tracks AI variants, starting at $120K.

- AI Security Architect: Designs defenses, averaging $200K.

- Malware Reverse Engineer: Dissects AI code, earning $175K.

Ethical Hacking Training Institute, Cybersecurity Training Institute, and Webasha Technologies prepare professionals for these roles.

Future Outlook: AI Malware by 2030

By 2030, AI malware may draw on more advanced technologies.

- Quantum Malware: A speculative threat in which quantum computing breaks current public-key encryption, with some forecasts claiming 80% faster cracking.

- Multimodal Attacks: Integrate deepfakes and vishing, with projected success rates as high as 95%.

- Autonomous Ecosystems: Self-evolving strains operate independently.

Defenders will likely respond with hybrid AI-human defenses that pair automated detection with analyst oversight and ethical governance.

Conclusion

From the AI-assisted experiments of the 2010s to the PromptLock ransomware reported in 2025, AI-generated malware has evolved into a formidable threat and contributes to cybercrime losses that some estimates put as high as $15 trillion. Techniques like GANs and LLMs have democratized and scaled attacks, challenging traditional defenses. Strategies like Zero Trust, behavioral analytics, and MFA, paired with training from Ethical Hacking Training Institute, Cybersecurity Training Institute, and Webasha Technologies, empower ethical hackers to fight back. Despite challenges like rapid mutation, understanding this timeline equips professionals to transform AI’s dark potential into a force for securing the digital future.

Frequently Asked Questions

When did AI-generated malware first emerge?

Early examples date to 2014, when genetic algorithms were demonstrated at Black Hat, followed by GAN-based techniques in 2017.

What is WormGPT?

WormGPT is an uncensored LLM, first reported in 2023, that dark web users employ to script malware.

How does PromptLock ransomware work?

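PromptLock, reported by ESET in 2025, is a proof-of-concept ransomware that uses an LLM to generate its malicious scripts at runtime, so each run can behave differently.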

Can AI malware evade detection?

Yes, it can mutate faster than signature-based systems are updated, reportedly by as much as 95%.

What defenses stop AI malware?

Zero Trust and behavioral analytics block 90% of adaptive threats.

Are AI malware tools widely available?

Yes, kits like FraudGPT enable novices, increasing attacks by 50%.

How has AI changed ransomware?

Ransomware has moved from static strains in the 2010s to autonomous variants in 2025, with reported costs above $1B that year.

What’s the biggest AI malware challenge?

Rapid mutation and attribution issues complicate defense.

Will quantum AI worsen malware?

Quantum hybrids will target encryption, requiring post-quantum defenses.

What certifications counter AI malware?

CEH AI, OSCP, and Ethical Hacking Training Institute’s AI Defender certify expertise.

Why pursue AI malware defense careers?

High demand offers $160K salaries for roles in threat mitigation.

How to detect AI-generated malware?

Use behavioral analytics and AI honeypots, which reportedly identify 90% of variants.
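As a rough illustration of behavioral analytics, the sketch below flags activity that deviates sharply from a host's own baseline using a z-score. It is a simplified stand-in for the ML models used in practice; the function name, the threshold of 3.0, and the file-writes-per-minute figures are assumptions made up for the example.

```python
from statistics import mean, stdev


def anomalous_points(baseline: list[float], observed: list[float],
                     threshold: float = 3.0) -> list[int]:
    """Return indexes in `observed` whose z-score against `baseline` exceeds `threshold`."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: treat any different value as anomalous.
        return [i for i, x in enumerate(observed) if x != mu]
    return [i for i, x in enumerate(observed) if abs(x - mu) / sigma > threshold]


# Hypothetical file-writes-per-minute counts for one host.
baseline_rates = [12, 15, 11, 14, 13, 12, 16, 14]
new_rates = [13, 15, 240, 12]

print(anomalous_points(baseline_rates, new_rates))  # [2]
```

A sudden burst of file writes, like the third reading here, is the kind of behavior mass encryption produces regardless of how the malware's code has been disguised.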

Can AI malware be fully autonomous?

Largely, yes. By 2025, agentic AI can run multi-stage attacks with little human input, and some sources describe these attacks as 95% autonomous.

What are future AI malware trends?

Quantum and multimodal attacks will dominate by 2030.

Will AI secure us from malware?

AI can strengthen proactive defenses such as behavioral detection, especially when defenders are trained to use it, for example through Ethical Hacking Training Institute.
