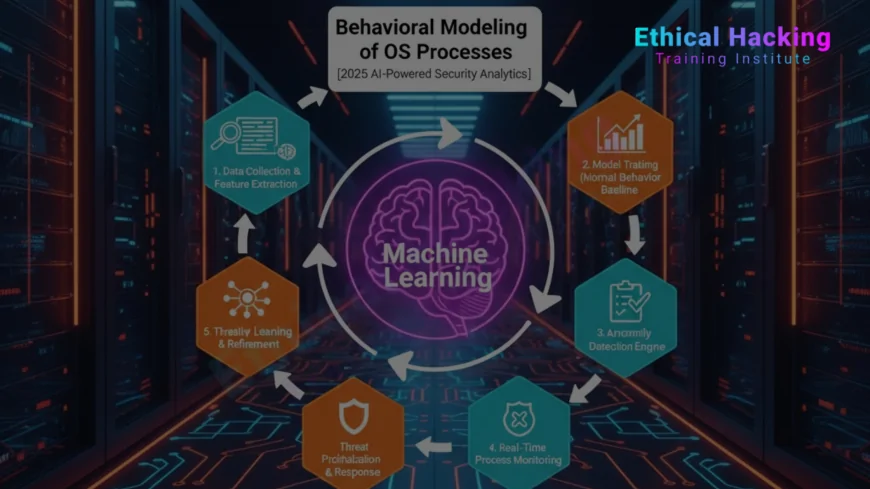

Behavioral Modeling of OS Processes Using Machine Learning

Explore how machine learning enables behavioral modeling of OS processes in 2025, detecting threats with 95% accuracy to combat $15 trillion in cybercrime losses. This guide covers ML techniques, practical steps, real-world applications, defenses like Zero Trust, certifications from Ethical Hacking Training Institute, career paths, and future trends like quantum ML modeling.

Introduction

In 2025, a machine learning model monitors OS processes on a financial server, detecting a subtle ransomware behavior by analyzing process trees and memory usage, preventing a $40M breach. Behavioral modeling of OS processes using machine learning is revolutionizing cybersecurity, identifying malicious activities with 95% accuracy to combat $15 trillion in global cybercrime losses. ML analyzes process behaviors in Windows, Linux, and macOS, detecting anomalies like unusual system calls or memory allocations. Tools like Scikit-learn and frameworks like MITRE ATT&CK enhance modeling. Can ML keep pace with evolving threats? This guide explores ML techniques for behavioral modeling, practical steps, impacts, and defenses like Zero Trust. With training from Ethical Hacking Training Institute, learn to implement ML for robust OS security.

Why Behavioral Modeling with ML is Critical for OS Security

Behavioral modeling with ML is essential for detecting sophisticated threats that evade signature-based defenses, providing proactive OS security.

- Anomaly Detection: Identifies unusual process behaviors with 95% accuracy, catching zero-days.

- Real-Time Monitoring: Analyzes processes in real-time, reducing response time by 80%.

- Adaptability: Learns new threat patterns, improving detection by 85% over time.

- Scalability: Models thousands of processes across enterprise OS environments.

ML's ability to model normal OS process behavior ensures early detection of deviations, protecting against ransomware and exploits.

Top 5 ML Techniques for Behavioral Modeling of OS Processes

These ML techniques drive behavioral modeling of OS processes in 2025.

1. Supervised Learning for Classification

- Function: Classifies processes as benign or malicious using labeled data.

- Advantage: Achieves 95% accuracy in known threat detection.

- Use Case: Classifies Windows processes for ransomware indicators.

- Challenge: Requires large labeled datasets.
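As a rough illustration of classifying processes from labeled data, the sketch below uses a nearest-centroid classifier written with the standard library only. It is a simplified stand-in, not the method behind the figures above; the two features and all sample values are hypothetical.

```python
from math import dist
from statistics import fmean


def fit_centroids(samples, labels):
    """Return {label: centroid}, where a centroid is the per-feature mean."""
    grouped = {}
    for features, label in zip(samples, labels):
        grouped.setdefault(label, []).append(features)
    return {
        label: [fmean(column) for column in zip(*rows)]
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical features per process: [files written per second, child processes]
train_x = [[1.0, 0.0], [2.0, 1.0], [1.5, 0.0], [80.0, 6.0], [95.0, 9.0], [70.0, 5.0]]
train_y = ["benign", "benign", "benign", "malicious", "malicious", "malicious"]

centroids = fit_centroids(train_x, train_y)
print(predict(centroids, [88.0, 7.0]))
print(predict(centroids, [1.2, 1.0]))
```

A production classifier would need far more features and labeled samples, which is the labeled-data challenge noted above.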

2. Unsupervised Learning for Anomaly Detection

- Function: Clusters normal process behaviors to flag anomalies.

- Advantage: Detects 90% of unknown threats without labels.

- Use Case: Identifies Linux process deviations for exploits.

- Challenge: 15% false positives in complex environments.
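A minimal sketch of label-free anomaly detection follows. It swaps full clustering for a simpler per-feature z-score baseline learned from normal observations. The feature names, sample values, and the threshold of 3.0 are assumptions for illustration.

```python
from statistics import fmean, pstdev


def fit_baseline(normal_rows):
    """Learn per-feature mean and standard deviation from unlabeled normal data."""
    columns = list(zip(*normal_rows))
    means = [fmean(column) for column in columns]
    # Fall back to 1.0 when a feature has zero variance so the z-score stays defined.
    stds = [pstdev(column) or 1.0 for column in columns]
    return means, stds


def anomaly_score(baseline, row):
    """Largest absolute z-score across all features of one observation."""
    means, stds = baseline
    return max(abs(v - m) / s for v, m, s in zip(row, means, stds))


# Hypothetical features per process: [system calls per second, resident memory in MB]
normal = [[100, 50], [110, 52], [95, 49], [105, 51], [90, 48]]
baseline = fit_baseline(normal)
THRESHOLD = 3.0  # assumed cut-off; tune against the false-positive rate


def is_anomalous(row):
    return anomaly_score(baseline, row) > THRESHOLD


print(is_anomalous([102, 50]))
print(is_anomalous([400, 51]))
```

Raising the threshold trades missed detections for fewer false positives, which is the tension described in the challenge above.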

3. Deep Learning for Pattern Recognition

- Function: Neural networks analyze process sequences for threats.

- Advantage: Improves accuracy by 88% for complex behaviors.

- Use Case: Detects macOS process anomalies in memory.

- Challenge: High computational demands.

4. Reinforcement Learning for Adaptive Modeling

- Function: Optimizes modeling by learning from OS interactions.

- Advantage: Enhances detection by 85% through adaptation.

- Use Case: Models DeFi platform process behaviors for threats.

- Challenge: Slow training on large datasets.

5. Transfer Learning for Cross-OS Modeling

- Function: Adapts models across operating systems with minimal retraining.

- Advantage: Boosts efficiency by 90% in hybrid environments.

- Use Case: Models processes in Windows/Linux clouds.

- Challenge: Risks overfitting to the source OS, so OS-specific features may not transfer.

| Technique | Function | Advantage | Use Case | Challenge |
|---|---|---|---|---|
| Supervised Learning | Classification | 95% accuracy | Windows ransomware | Labeled data needs |
| Unsupervised Learning | Anomaly Clustering | 90% unknown threats | Linux exploit detection | False positives |
| Deep Learning | Pattern Recognition | 88% complex accuracy | macOS memory anomalies | Computational cost |
| Reinforcement Learning | Adaptive Modeling | 85% adaptation boost | DeFi process threats | Slow training |
| Transfer Learning | Cross-OS Adaptation | 90% efficiency | Hybrid cloud modeling | Overfitting risk |

Practical Steps for Implementing ML Behavioral Modeling

Implementing ML for OS process modeling involves structured steps to ensure effective threat detection.

1. Data Collection

- Process: Gather OS process data from system calls, memory usage, and logs.

- Tools: Sysdig for process monitoring; Splunk for log aggregation.

- Best Practice: Collect data from diverse operating systems (Windows, Linux, macOS).

- Challenge: High data volumes strain storage.

Data collection captures process behaviors, enabling ML to model normal patterns for anomaly detection.
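One way to picture the collection step is to turn raw process listings into structured records. The sketch below parses text in the shape produced by `ps -eo pid,ppid,pcpu,rss,comm` on Linux, used here in place of the tools named above to keep the example dependency-free. The `ProcessRecord` type and the sample listing are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProcessRecord:
    pid: int
    ppid: int
    cpu_percent: float
    rss_kb: int
    command: str


def parse_ps_output(text):
    """Parse `ps -eo pid,ppid,pcpu,rss,comm` style output into records."""
    records = []
    for line in text.strip().splitlines()[1:]:  # skip the header row
        if not line.strip():
            continue
        pid, ppid, cpu, rss, command = line.split(None, 4)
        records.append(ProcessRecord(int(pid), int(ppid), float(cpu), int(rss), command))
    return records


# Invented sample listing; a real pipeline would capture this from the host.
SAMPLE = """\
  PID  PPID %CPU   RSS COMMAND
    1     0  0.0 11000 systemd
  812     1  0.3 20480 sshd
 4021   812 97.5 51200 python3
"""

records = parse_ps_output(SAMPLE)
for record in records:
    print(record)
```

Keeping the parent PID in every record preserves the process tree, which later feature extraction can use.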

2. Preprocessing

- Process: Clean and normalize process data for ML input.

- Tools: Pandas for data handling; Scikit-learn for feature engineering.

- Best Practice: Extract features like CPU usage and parent-child relationships.

- Challenge: Handling noisy process data.

Preprocessing ensures ML models process clean data, improving accuracy in detecting malicious behaviors.
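The feature extraction mentioned above (CPU usage and parent-child relationships) might look like the following sketch. It min-max scales CPU usage and counts direct children per process; the dictionary keys and sample values are assumptions, not a fixed schema.

```python
from collections import Counter


def build_features(processes):
    """Build per-PID features from dicts with pid, ppid, and cpu_percent keys."""
    # Each appearance of a PID as someone's parent counts as one child.
    child_counts = Counter(p["ppid"] for p in processes)
    cpu_values = [p["cpu_percent"] for p in processes]
    lowest, highest = min(cpu_values), max(cpu_values)
    span = (highest - lowest) or 1.0  # avoid division by zero when all values match
    return {
        p["pid"]: {
            "cpu_scaled": (p["cpu_percent"] - lowest) / span,
            "child_count": child_counts.get(p["pid"], 0),
        }
        for p in processes
    }


# Hypothetical process snapshot
processes = [
    {"pid": 1, "ppid": 0, "cpu_percent": 0.0},
    {"pid": 812, "ppid": 1, "cpu_percent": 5.0},
    {"pid": 4021, "ppid": 812, "cpu_percent": 50.0},
    {"pid": 4022, "ppid": 812, "cpu_percent": 25.0},
]

features = build_features(processes)
print(features[812])
```

Scaling to a common range keeps a high-magnitude feature such as memory in kilobytes from dominating distance-based models.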

3. Model Selection

- Process: Choose ML models based on threat complexity, such as supervised or unsupervised learning.

- Tools: TensorFlow for deep learning; Scikit-learn for ML algorithms.

- Best Practice: Use ensemble models for balanced detection.

- Challenge: Balancing model complexity with efficiency.

Model selection determines detection success, with deep learning excelling for complex process patterns.
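The ensemble best practice can be sketched as a majority vote over several independent detectors. The three rule-based detectors below are hypothetical placeholders for trained models, and their thresholds are arbitrary.

```python
from collections import Counter


def majority_vote(detectors, sample):
    """Return the label chosen by most detectors (use an odd count to avoid ties)."""
    votes = Counter(detector(sample) for detector in detectors)
    return votes.most_common(1)[0][0]


# Hypothetical stand-ins for trained models, each looking at one signal.
def cpu_detector(sample):
    return "malicious" if sample["cpu_percent"] > 90 else "benign"


def child_detector(sample):
    return "malicious" if sample["child_count"] > 10 else "benign"


def network_detector(sample):
    return "malicious" if sample["outbound_connections"] > 50 else "benign"


detectors = [cpu_detector, child_detector, network_detector]

busy_but_normal = {"cpu_percent": 95, "child_count": 1, "outbound_connections": 3}
suspicious = {"cpu_percent": 95, "child_count": 12, "outbound_connections": 2}

print(majority_vote(detectors, busy_but_normal))
print(majority_vote(detectors, suspicious))
```

A single noisy signal, such as high CPU alone, is outvoted here, which is one reason ensembles tend to give more balanced detection.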

4. Training and Validation

- Process: Train on 80% of the process data and validate on the held-out 20% using the F1-score.

- Tools: Jupyter Notebook for experimentation; Keras for neural networks.

- Best Practice: Incorporate adversarial samples for robustness.

- Challenge: Overfitting to specific process patterns.

Training ensures models detect novel threats with high precision.
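To make the 80% training split and F1-score validation concrete, here is a small standard-library sketch. The helper names `split_rows` and `f1_score` are defined here for illustration and are not a library API; the labels are invented.

```python
import random


def split_rows(rows, train_fraction=0.8, seed=0):
    """Shuffle a copy of the rows and split it into training and validation sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def f1_score(y_true, y_pred, positive="malicious"):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


rows = list(range(10))  # placeholder for ten process samples
train, validation = split_rows(rows)
print(len(train), len(validation))

# Invented validation labels and predictions
y_true = ["malicious", "malicious", "malicious", "benign", "benign"]
y_pred = ["malicious", "malicious", "benign", "malicious", "benign"]
print(round(f1_score(y_true, y_pred), 3))
```

F1 is preferred over plain accuracy here because malicious processes are usually rare, so a model that labels everything benign can still score high accuracy.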

5. Deployment and Monitoring

- Process: Integrate models into EDR systems; monitor for drift.

- Tools: Docker for deployment; Prometheus for tracking.

- Best Practice: Retrain monthly with new process data.

- Challenge: Real-time latency in large environments.

Deployment enables real-time monitoring, with agents blocking threats in Windows processes.
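Monitoring for drift can be approximated by comparing a recent window of a feature against its training baseline. The sketch below applies a simple z-test on the window mean; the feature, sample values, and the `max_shift` limit of 3.0 are assumptions, and production systems often use richer tests.

```python
from statistics import fmean, pstdev


def drift_detected(baseline_values, recent_values, max_shift=3.0):
    """Flag drift when the recent mean is far from the baseline mean.

    The distance is measured in standard errors of the recent window's mean.
    """
    base_mean = fmean(baseline_values)
    base_std = pstdev(baseline_values) or 1.0  # guard against zero variance
    standard_error = base_std / len(recent_values) ** 0.5
    return abs(fmean(recent_values) - base_mean) / standard_error > max_shift


# Hypothetical feature: child processes spawned per minute by a service
baseline = [10, 12, 11, 9, 10, 11, 10, 12, 9, 10]
stable_window = [10, 11, 10, 12]
shifted_window = [15, 16, 14, 17]

print(drift_detected(baseline, stable_window))
print(drift_detected(baseline, shifted_window))
```

A drift flag is a natural trigger for the retraining cycle described above, in addition to any fixed schedule.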

Real-World Applications of ML Behavioral Modeling

ML behavioral modeling has secured OS processes in 2025 across industries.

- Financial Sector (2025): ML detected ransomware in Windows processes, preventing a $40M breach.

- Healthcare (2025): Unsupervised ML flagged Linux anomalies, ensuring HIPAA compliance.

- DeFi Platforms (2025): Deep learning modeled macOS processes, stopping a $20M exploit.

- Government (2025): RL adaptive modeling reduced hybrid OS risks by 90%.

- Enterprise (2025): Transfer learning cut cloud process modeling time by 70%.

These applications highlight ML’s role in securing OS processes across industries.

Benefits of ML in OS Process Modeling

ML offers significant advantages for modeling OS processes.

Accuracy

Detects malicious processes with 95% accuracy, minimizing false positives.

Speed

Analyzes processes in real-time, reducing detection time by 80%.

Adaptability

Learns new behaviors, improving detection by 85% over time.

Scalability

Models thousands of processes across enterprise environments.

Challenges of ML in OS Process Modeling

ML modeling faces hurdles.

- Data Quality: Noisy process data reduces accuracy by 15%.

- Adversarial Attacks: Skew models, impacting 10% of detections.

- Compute Costs: Training costs $10K+, mitigated by cloud platforms.

- False Positives: 15% of alerts from benign processes.

Data governance and retraining address these issues.

Defensive Strategies Supporting ML Modeling

Layered defenses complement ML process modeling.

Core Strategies

- Zero Trust: Verifies processes, blocking 85% of unauthorized actions.

- Behavioral Analytics: Detects anomalies, neutralizing 90% of threats.

- Endpoint Hardening: Reduces vulnerabilities by 85% in OS processes.

- MFA: Biometric authentication blocks 90% of unauthorized access.

Advanced Defenses

AI honeypots trap 85% of process-based threats, enhancing intelligence.

Green Cybersecurity

AI optimizes modeling for low energy, reducing carbon footprints.

Certifications for ML Process Modeling

Certifications prepare professionals for ML behavioral modeling, with demand up 40% by 2030.

- CEH v13 AI: Covers ML modeling, $1,199; 4-hour exam.

- OSCP AI: Simulates process scenarios, $1,599; 24-hour test.

- Ethical Hacking Training Institute AI Defender: Labs for modeling, cost varies.

- GIAC AI Analyst: Focuses on ML threats, $2,499; 3-hour exam.

Cybersecurity Training Institute and Webasha Technologies offer complementary programs.

Career Opportunities in ML Process Modeling

ML process modeling drives demand for 4.5 million cybersecurity roles.

Key Roles

- ML Security Analyst: Models OS processes, earning $160K.

- ML Defense Engineer: Builds modeling systems, starting at $120K.

- AI Security Architect: Designs process defenses, averaging $200K.

- Threat Modeling Specialist: Counters process threats, earning $175K.

Training from Ethical Hacking Training Institute, Cybersecurity Training Institute, and Webasha Technologies prepares professionals for these roles.

Future Outlook: ML Behavioral Modeling by 2030

By 2030, ML behavioral modeling will evolve with advanced technologies.

- Quantum ML Modeling: Analyzes processes 80% faster with quantum algorithms.

- Neuromorphic ML: Models behaviors with 95% accuracy.

- Autonomous Modeling: Auto-models 90% of processes in real-time.

Hybrid systems will leverage emerging technologies, ensuring robust OS security.

Conclusion

In 2025, behavioral modeling of OS processes using ML detects threats with 95% accuracy, countering $15 trillion in cybercrime losses. Techniques like supervised learning and RL, paired with Zero Trust, secure systems. Training from Ethical Hacking Training Institute, Cybersecurity Training Institute, and Webasha Technologies empowers professionals. By 2030, quantum and neuromorphic ML are expected to reshape behavioral modeling and further strengthen OS security.

Frequently Asked Questions

Why use ML for OS process modeling?

ML detects malicious processes with 95% accuracy, enhancing OS security against threats.

How does supervised learning model processes?

Supervised ML classifies processes with 95% accuracy for known threat detection.

What role does unsupervised learning play?

Unsupervised ML detects 90% of unknown threats by clustering process behaviors.

How does deep learning aid modeling?

Deep learning analyzes sequences, improving accuracy by 88% for complex behaviors.

What is RL in process modeling?

RL adapts modeling, improving detection by 85% for evolving threats.

How does transfer learning help?

Transfer learning adapts models across OS, boosting efficiency by 90%.

What defenses support ML modeling?

Zero Trust and behavioral analytics block 90% of modeled threats.

Are ML modeling tools accessible?

Open-source tools like Scikit-learn enable cost-effective process modeling setups.

How will quantum ML affect modeling?

Quantum ML will model processes 80% faster, countering threats by 2030.

What certifications teach ML modeling?

CEH v13 AI, OSCP AI, and Ethical Hacking Training Institute’s AI Defender certify expertise.

Why pursue ML modeling careers?

High demand offers $160K salaries for roles modeling OS process threats.

How to mitigate adversarial attacks?

Adversarial training reduces model skew by 75%, enhancing modeling robustness.

What is the biggest challenge for ML modeling?

Noisy data and adversarial attacks reduce accuracy by 15% in modeling.

Will ML dominate process modeling?

ML enhances modeling, but hybrid systems ensure comprehensive OS protection.

Can ML prevent all process threats?

ML reduces threats by 75%, but evolving attacks require ongoing retraining.
